Understanding Data Storage in Data Engineering: Insights from QuantumDataLytica

As the volume of data generated globally continues to increase (it was predicted to reach 181 zettabytes by 2025), efficient and scalable data storage has become one of the central concerns of data engineering. The ability to store, manage, and retrieve data efficiently is at the core of any data-driven organization’s success.

At QuantumDataLytica, we specialize in crafting data solutions that empower businesses to manage their data efficiently, ensuring that storage is not just about capacity but also about speed, reliability, and scalability. In this article, we explore the various types of data storage solutions available today, highlighting their advantages, challenges, and ideal use cases. We’ll also cover key considerations that data engineers must keep in mind when selecting a storage solution for their specific needs.

What is Data Storage in Data Engineering?

Data storage in data engineering refers to the processes and technologies used to capture, manage, and store data so that it can be accessed easily and processed efficiently. As data engineers, we make storage architecture decisions that directly influence how well a company can perform analytics, maintain operational efficiency, and scale to meet future demands.

At QuantumDataLytica, we understand that data storage is more than just a technical choice: it is foundational to the entire data ecosystem. From seamless data access and processing to data integrity, security, and scalability, the right storage solution underpins every data-driven initiative.

Types of Data Storage Systems

Selecting the right data storage system is crucial for any data engineering pipeline. Below, we’ll break down the main types of data storage systems that we commonly work with at QuantumDataLytica, exploring their strengths and ideal use cases.

1. Relational Databases (RDBMS)

Relational databases have been the gold standard for structured data storage for decades. They are ideal for transactional systems where data consistency and integrity are paramount.

Key Features:

- ACID compliance ensures reliable transactions.

- Optimized for complex queries and joins.

- Ideal for structured data with clearly defined relationships.
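As a minimal illustration of the ACID guarantee above, the sketch below uses Python's built-in `sqlite3` module (an embedded engine chosen only because it needs no server; the `accounts` table and the `transfer` helper are hypothetical). A funds transfer runs as a single transaction: if the debit would violate the `CHECK (balance >= 0)` constraint, the credit that was already applied is rolled back as well, so no partial update ever becomes visible.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    """Create a hypothetical in-memory accounts table with a non-negative balance rule."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE accounts ("
        "id TEXT PRIMARY KEY, balance INTEGER NOT NULL CHECK (balance >= 0))"
    )
    with conn:  # commit the seed rows as one transaction
        conn.executemany("INSERT INTO accounts VALUES (?, ?)", [("alice", 100), ("bob", 50)])
    return conn


def transfer(conn: sqlite3.Connection, src: str, dst: str, amount: int) -> bool:
    """Move `amount` from src to dst atomically; return False if the transaction was rolled back."""
    try:
        with conn:  # commits on success, rolls back if an exception escapes the block
            # Credit first on purpose, so a failed debit shows the rollback undoing it.
            conn.execute("UPDATE accounts SET balance = balance + ? WHERE id = ?", (amount, dst))
            conn.execute("UPDATE accounts SET balance = balance - ? WHERE id = ?", (amount, src))
        return True
    except sqlite3.IntegrityError:  # raised when the CHECK constraint fails
        return False


def balances(conn: sqlite3.Connection) -> dict:
    return dict(conn.execute("SELECT id, balance FROM accounts ORDER BY id"))
```

The same pattern applies to MySQL, PostgreSQL, or Oracle through their own drivers, although transaction and isolation defaults differ from engine to engine.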

Common Use Cases:

- Financial applications.

- Customer relationship management (CRM) systems.

- Enterprise resource planning (ERP) systems.

At QuantumDataLytica, we help clients implement and optimize relational database systems, ensuring that they handle transactional workloads with optimal performance and scalability. Whether using MySQL, PostgreSQL, or Oracle, we ensure your relational database is tuned for your business needs. Learn more about our Data Engineering Services.

2. NoSQL Databases

For projects that require handling unstructured or semi-structured data at scale, NoSQL databases like MongoDB, Cassandra, and Redis offer the flexibility and performance necessary for real-time data applications.

Key Features:

- Schema flexibility, allowing storage of unstructured or semi-structured data.

- Horizontal scalability for handling high volumes of data.

- Ideal for low-latency, high-throughput workloads.
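Horizontal scalability in stores such as Cassandra relies on partitioning: each key is hashed and assigned to one of many nodes, so adding nodes adds capacity. The sketch below is a simplified consistent-hashing ring with virtual nodes, written for illustration only; it is not the actual partitioner of Cassandra or any other product, and the node names and `vnodes=100` setting are arbitrary assumptions. Its useful property is that adding a node relocates only the keys that now fall on the new node's positions, instead of reshuffling almost everything as `hash(key) % node_count` would.

```python
import bisect
import hashlib


class HashRing:
    """Simplified consistent-hashing ring with virtual nodes (illustrative sketch)."""

    def __init__(self, nodes, vnodes=100):
        self.vnodes = vnodes   # positions per physical node; smooths the key distribution
        self._ring = []        # sorted (position, node) pairs
        self._positions = []   # positions only, kept in sync for bisect
        for node in nodes:
            self.add_node(node)

    @staticmethod
    def _hash(value: str) -> int:
        # First 8 bytes of SHA-256 as an unsigned integer position on the ring.
        return int.from_bytes(hashlib.sha256(value.encode()).digest()[:8], "big")

    def add_node(self, node: str) -> None:
        for i in range(self.vnodes):
            self._ring.append((self._hash(f"{node}#{i}"), node))
        self._ring.sort()
        self._positions = [pos for pos, _ in self._ring]

    def node_for(self, key: str) -> str:
        # Walk clockwise to the first node position at or after the key's hash,
        # wrapping around to the start of the ring if necessary.
        idx = bisect.bisect_left(self._positions, self._hash(key)) % len(self._positions)
        return self._ring[idx][1]
```

In use, `HashRing(["node-a", "node-b", "node-c"]).node_for("user:42")` returns the node responsible for that key; after `add_node("node-d")`, every key either stays where it was or moves to `node-d`.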

Common Use Cases:

- Real-time analytics and event logging.

- Social media data management.

- Internet of Things (IoT) and sensor data processing.

QuantumDataLytica integrates NoSQL solutions into big data architectures to help businesses manage massive, constantly evolving data sets while providing real-time insights. Explore our NoSQL Implementation Services.
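Distributed NoSQL stores also trade consistency against latency. Cassandra, for example, lets each read and write specify how many replicas must respond. A widely used rule for this style of replication is that with N replicas, a read quorum of R and a write quorum of W always overlap (so every read contacts at least one replica holding the latest acknowledged write) when R + W > N. The brute-force check below is a small sketch that confirms the rule for small N; the function names are hypothetical.

```python
from itertools import combinations


def quorums_always_overlap(n: int, r: int, w: int) -> bool:
    """Brute force: does every set of r replicas share a member with every set of w replicas?"""
    replicas = range(n)
    return all(
        set(read_set) & set(write_set)
        for read_set in combinations(replicas, r)
        for write_set in combinations(replicas, w)
    )


def overlap_rule(n: int, r: int, w: int) -> bool:
    """The R + W > N rule of thumb."""
    return r + w > n


# Compare the rule with brute force for every quorum size on up to 5 replicas.
for n in range(1, 6):
    for r in range(1, n + 1):
        for w in range(1, n + 1):
            assert quorums_always_overlap(n, r, w) == overlap_rule(n, r, w)
```

For example, with N = 3, quorum reads and writes (R = W = 2) satisfy the rule, while R = W = 1 does not and can return stale data.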

3. Data Lakes

Data lakes are central repositories that allow organizations to store vast amounts of raw data in its native format at scale. They enable flexible, schema-on-read processing, making them well suited to big data and advanced analytics.

Key Features:

- Schema-on-read: raw data is stored as-is, and a schema is applied only when the data is read or queried.

- Handles diverse data types (structured, semi-structured, and unstructured).

- Optimized for big data workloads, including machine learning and advanced analytics.
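To make schema-on-read concrete, the sketch below keeps raw JSON lines exactly as they landed (heterogeneous fields, a string where a number is expected, one malformed line) and applies a schema only in the reader. The event fields, the `SCHEMA` mapping, and the choices to skip malformed lines and fill missing fields with `None` are assumptions for illustration; query engines commonly used on data lakes, such as Apache Spark, apply the same idea at much larger scale.

```python
import json
from datetime import datetime

# Raw events exactly as they landed in the lake (hypothetical sample data).
RAW_EVENTS = [
    '{"device": "s-1", "temp_c": "21.5", "ts": "2025-01-01T00:00:00"}',
    '{"device": "s-2", "temp_c": 19, "ts": "2025-01-01T00:05:00", "fw": "1.2"}',
    '{"device": "s-3", "ts": "2025-01-01T00:10:00"}',
    'not valid json',
]

# The schema lives with the reader, not the writer: field name -> type conversion.
SCHEMA = {"device": str, "temp_c": float, "ts": datetime.fromisoformat}


def read_with_schema(lines, schema):
    """Apply `schema` at read time; skip unparseable lines, fill missing fields with None."""
    for line in lines:
        try:
            raw = json.loads(line)
        except json.JSONDecodeError:
            continue  # a production pipeline might route this line to a quarantine area
        # Fields not in the schema (such as "fw") are simply not projected.
        yield {
            field: None if raw.get(field) is None else convert(raw[field])
            for field, convert in schema.items()
        }


for row in read_with_schema(RAW_EVENTS, SCHEMA):
    print(row)
```

Because the raw lines are never rewritten, a different team can read the same files later with a different `SCHEMA`, for example one that also projects `fw`.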

Common Use Cases:

- Storing logs, sensor data, and multimedia files.

- Preparing data for machine learning and AI models.

- Centralized storage for diverse data sources.

At QuantumDataLytica, we build and optimize data lakes on platforms like AWS S3, Azure Data Lake Storage, and Google Cloud Storage, enabling our clients to store and process large volumes of data flexibly and cost-effectively. For more on data lake solutions, visit our Data Lake Services.

Key Considerations for Data Storage

As experts at QuantumDataLytica, we advise clients to carefully consider several factors when selecting a data storage solution:

- Scalability: Can the solution handle future data growth? Cloud solutions typically offer better horizontal scalability.

- Data Consistency: Does the system guarantee data consistency in distributed environments? This is especially critical in financial systems.

- Security: Is the data encrypted both at rest and in transit? Security should be a top priority, especially for sensitive or regulated data.

- Cost Efficiency: Does the storage solution fit within the organization’s budget while providing necessary performance? Cloud storage offers flexible pricing, but it can become expensive without proper cost management.

- Performance: Does the solution meet the required speed for data access and query execution? Low-latency systems are critical for real-time analytics and operational workloads.
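The cost-efficiency question above often comes down to simple arithmetic about growth. The sketch below estimates cumulative storage spend when data grows by a fixed percentage each month and nothing is deleted or moved to a cheaper tier. The price per GB-month is a hypothetical input, not a quote from any provider; real bills also include requests, egress, and storage-class differences.

```python
def projected_storage_cost(initial_gb: float, monthly_growth: float,
                           price_per_gb_month: float, months: int) -> float:
    """Cumulative cost over `months`, assuming geometric growth and no deletion or tiering."""
    total = 0.0
    size_gb = initial_gb
    for _ in range(months):
        total += size_gb * price_per_gb_month  # bill for the data held this month
        size_gb *= 1 + monthly_growth          # data grows before next month's bill
    return total


# Hypothetical scenario: 10 TB, a $0.02/GB-month price, three years.
flat = projected_storage_cost(10_000, 0.0, 0.02, 36)
growing = projected_storage_cost(10_000, 0.05, 0.02, 36)
print(f"no growth: {flat:,.0f}  5% monthly growth: {growing:,.0f}")
```

Under these assumptions, 5% monthly growth more than doubles the three-year total compared with a flat data volume, which is why lifecycle policies and retention rules matter as much as the headline price.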

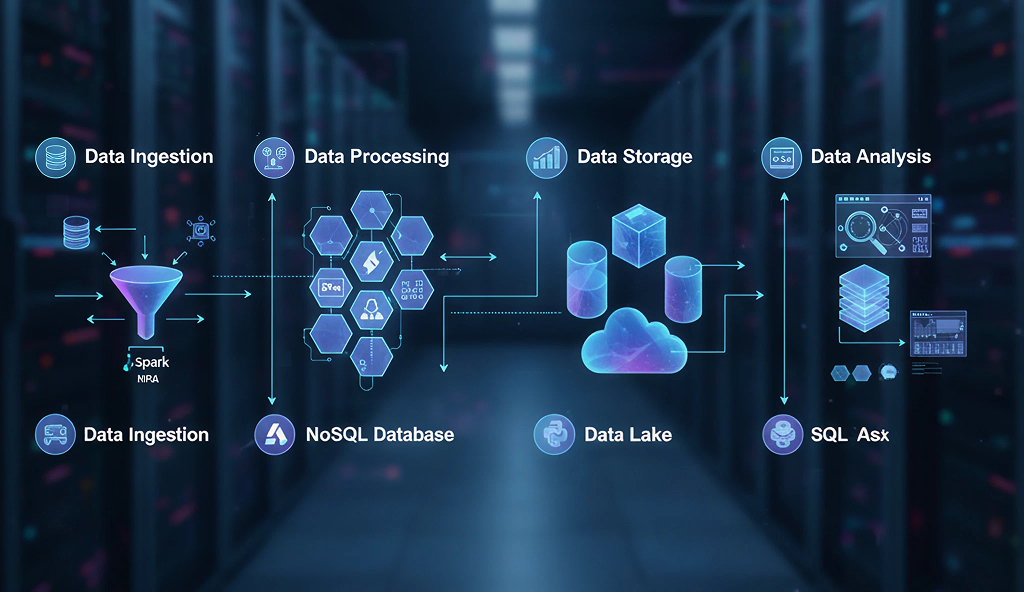

Data Storage in the Data Engineering Pipeline

In our data engineering work at QuantumDataLytica, we take a holistic approach to data storage within the broader data pipeline. Here’s how data storage fits into the overall workflow:

- Data Ingestion: Data from various sources (databases, APIs, sensors, etc.) is ingested into the system.

- Data Processing: The ingested data is processed and transformed using tools like Apache Spark, with Apache Kafka commonly used to move streaming data between stages.

- Data Storage: After processing, data is stored in the appropriate storage solution, whether a relational database, NoSQL database, data lake, or data warehouse.

- Data Analysis: Data scientists and analysts query the stored data for insights, typically using SQL, Python, or BI tools.
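The four stages above can be sketched end to end in a few lines of Python. Everything here is hypothetical: the hard-coded JSON records stand in for an API or sensor feed, a plain function stands in for a Spark job, and an in-memory SQLite table stands in for the storage layer. The point is only the hand-off between stages.

```python
import json
import sqlite3


def ingest():
    """Ingestion: hypothetical raw records as they might arrive from an API."""
    return [
        '{"order_id": 1, "amount": "19.50", "country": "us"}',
        '{"order_id": 2, "amount": "5.25", "country": "US"}',
        '{"order_id": 3, "amount": "42.00", "country": "de"}',
    ]


def transform(raw_lines):
    """Processing: parse, cast types, and normalize country codes."""
    for line in raw_lines:
        rec = json.loads(line)
        yield rec["order_id"], float(rec["amount"]), rec["country"].upper()


def store(conn, rows):
    """Storage: persist the cleaned rows in a relational table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, amount REAL NOT NULL, country TEXT NOT NULL)"
    )
    with conn:  # load the whole batch in one transaction
        conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)


def analyze(conn):
    """Analysis: the kind of SQL aggregate an analyst or BI tool would run."""
    return dict(conn.execute(
        "SELECT country, SUM(amount) FROM orders GROUP BY country ORDER BY country"
    ))


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    store(conn, transform(ingest()))
    print(analyze(conn))  # {'DE': 42.0, 'US': 24.75}
```

Keeping each stage behind its own function mirrors the separation described above: the storage target can change (for example, to a data lake or warehouse) without rewriting ingestion or analysis.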

At QuantumDataLytica, we ensure that each stage of the data pipeline is optimized for performance, scalability, and security, with storage solutions carefully selected to meet our clients’ specific needs.

Conclusion

At QuantumDataLytica, we understand that data storage is the backbone of any data architecture. The right storage solution, whether a relational database, NoSQL system, data lake, or cloud-based storage, can make or break an organization’s ability to scale, perform analytics, and unlock insights from its data.

As data continues to grow, it is essential for organizations to adopt flexible, scalable, and secure data storage solutions. Whether you need help setting up a data lake, optimizing a data warehouse, or selecting the best cloud storage option, QuantumDataLytica is here to guide you through the process.

Our team of experts works closely with each client to craft tailored data storage strategies that align with their business goals. If you’re ready to take control of your data storage and accelerate your data engineering initiatives, reach out to us today.
