How to Build an Efficient Data Engineering Pipeline

Building an efficient data engineering pipeline is essential for any organization that wants to make data-driven decisions.

Whether you’re dealing with real-time data streams or batch processing, your pipeline needs to be scalable, reliable, and efficient.

Let’s walk through the core steps to design and build a robust data engineering pipeline, ensuring that it’s future-proof and optimized for performance.

1. Define Your Data Pipeline Goals

Before jumping into tools or technologies, it’s crucial to understand your business requirements. Here’s where it all begins:

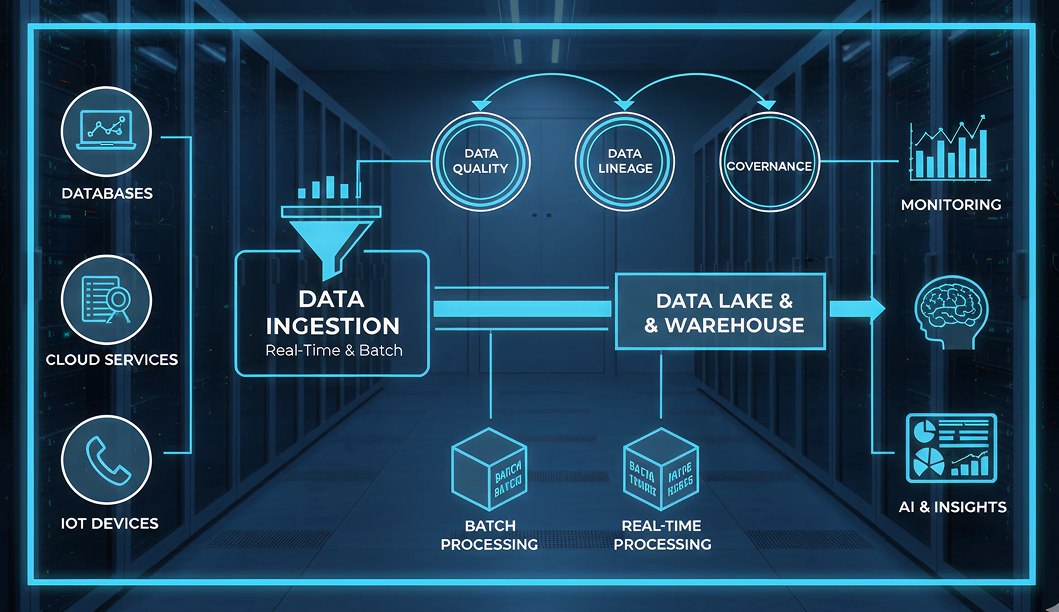

- Data Sources: Start by identifying where your data is coming from. Is it stored in databases, cloud services, or generated by IoT devices?

- Data Consumers: Who will be using the data? Think about data scientists, analysts, or business intelligence tools that need access to the processed data.

- Data Arrival Pattern: Does your data arrive continuously in real time, or in periodic batches? The answer shapes your choice of tools and the design of your pipeline.

- Objectives: What specific problems are you solving with your data pipeline? Are you aiming for faster insights, automating reports, or enabling predictive analytics?

By understanding these goals, you can ensure that the pipeline design aligns with your business needs.

2. Choose the Right Data Pipeline Architecture

Choosing the right architecture is one of the most critical decisions you’ll make when building a data pipeline. It determines how data flows, gets processed, and is stored. There are several architectures to consider:

- Batch Processing: Ideal for processing large volumes of data at scheduled intervals, such as generating reports or running historical analysis.

- Real-Time Processing: If you need to process data as it arrives, such as for monitoring or fraud detection, real-time data processing is essential.

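- Hybrid Architecture: Combining batch and real-time processing gives you the flexibility to handle both types of workloads. The Lambda architecture runs a batch layer and a real-time (speed) layer side by side; the Kappa architecture is a streaming-only alternative that handles both workloads in a single stream-processing path and reprocesses history by replaying the event log.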

Key Takeaway: The architecture you choose should match the speed and volume of your data processing needs.
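To make the batch/real-time distinction concrete, here is a minimal Python sketch of a tumbling-window count. The event shape `(timestamp, event_type)` and the function name `tumbling_counts` are hypothetical. Because the function consumes any iterable, the same code can process a bounded list (a batch, or a replay of history) or an unbounded generator fed by a message broker, which is the idea behind Kappa-style designs. It assumes events arrive in timestamp order.

```python
from collections import Counter
from typing import Dict, Iterable, Iterator, Optional, Tuple

# Hypothetical event shape: (timestamp in seconds, event type).
Event = Tuple[int, str]


def tumbling_counts(
    events: Iterable[Event], window_seconds: int
) -> Iterator[Tuple[int, Dict[str, int]]]:
    """Count event types per fixed, non-overlapping time window.

    Assumes events arrive in timestamp order. A window is emitted as soon as
    an event from a later window appears; for a bounded input, the final
    window is flushed when the input ends.
    """
    current_window: Optional[int] = None
    counts: Counter = Counter()
    for ts, kind in events:
        window_start = ts - ts % window_seconds
        if current_window is not None and window_start != current_window:
            yield current_window, dict(counts)
            counts = Counter()
        current_window = window_start
        counts[kind] += 1
    if current_window is not None:
        yield current_window, dict(counts)


# Batch mode: a finite list of historical events.
history = [(0, "click"), (5, "view"), (12, "click")]
print(list(tumbling_counts(history, window_seconds=10)))
```

In streaming mode you would pass a generator that yields events from a consumer instead of a list, and windows are emitted as time advances. Handling late or out-of-order events (for example with watermarks) is deliberately left out of this sketch.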

3. Set Up Robust Data Ingestion Mechanisms

Data ingestion is the first step in any data pipeline. This is where data is brought into the pipeline for processing. You need mechanisms that are capable of handling both batch and real-time data efficiently.

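- Batch Ingestion: Apache Sqoop has traditionally been used to periodically load large amounts of data from relational databases, but it was retired to the Apache Attic in 2021; today, Spark’s JDBC data source or a managed ingestion service is a more common choice.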

- Real-Time Ingestion: If you’re dealing with continuous data, message brokers like Apache Kafka or cloud services like Azure Event Hubs can ingest high-volume event streams and buffer them durably until downstream consumers process them.

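- Change Data Capture (CDC): Rather than reloading entire tables, use CDC to capture only the rows that were inserted, updated, or deleted in the source system, typically by reading the database’s transaction log, and apply those changes downstream.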
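Log-based CDC is usually done with dedicated tooling that reads the database’s transaction log. A simpler, query-based alternative for incremental batch loads is a high-water mark: remember the latest `updated_at` value you have seen and fetch only rows changed after it. The sketch below uses Python’s built-in `sqlite3` so it runs anywhere; the `customers` table, its columns, and the `extract_incremental` helper are hypothetical.

```python
import sqlite3
from typing import List, Tuple


def extract_incremental(
    conn: sqlite3.Connection, last_watermark: str
) -> Tuple[List[tuple], str]:
    """Fetch rows changed since `last_watermark` and return the new watermark.

    Timestamps are ISO-8601 strings, which sort correctly as text.
    """
    rows = conn.execute(
        "SELECT id, name, updated_at FROM customers "
        "WHERE updated_at > ? ORDER BY updated_at",
        (last_watermark,),
    ).fetchall()
    # Advance the watermark to the newest change seen; keep it if nothing changed.
    new_watermark = rows[-1][2] if rows else last_watermark
    return rows, new_watermark


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, updated_at TEXT)")
conn.executemany(
    "INSERT INTO customers VALUES (?, ?, ?)",
    [(1, "Ada", "2024-01-01T10:00:00"), (2, "Grace", "2024-01-02T09:30:00")],
)

# First run: starting from an early watermark amounts to a full load.
rows, watermark = extract_incremental(conn, "1970-01-01T00:00:00")

# Later run: only the updated row comes back.
conn.execute(
    "UPDATE customers SET name = ?, updated_at = ? WHERE id = ?",
    ("Ada L.", "2024-01-03T08:00:00", 1),
)
changes, watermark = extract_incremental(conn, watermark)
```

In a real pipeline the watermark would be persisted between runs. Unlike log-based CDC, this approach cannot see hard deletes and can miss rows whose `updated_at` is not reliably maintained, which is why log-based CDC is preferred when those cases matter.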

A well-designed ingestion layer will ensure that your data is reliably fed into your pipeline without bottlenecks.

4. Design Scalable Data Processing

Once your data is ingested, it’s time to process it. Scalability is key here. As your data grows, your processing should be able to scale efficiently. Consider the following:

- Parallel Processing: Distributed frameworks like Apache Spark or AWS Glue allow you to process large datasets in parallel, speeding up transformation tasks.

- Data Partitioning: Splitting large datasets into smaller partitions, for example by date or region, lets workers process partitions in parallel and lets queries skip partitions they don’t need.

- Cloud-Based Solutions: Leveraging cloud-native tools like AWS Lambda or Azure Databricks provides flexibility to scale up or down based on workload needs.
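The partition-then-process pattern behind these tools can be sketched in plain Python. This is not how Spark or Glue are used; it only shows the shape of the idea: group records by a partition key, run the same transformation on each partition concurrently, then combine the results. The record fields (`order_date`, `amount`) and function names are hypothetical. Threads keep the example simple; for CPU-heavy pure-Python work, `concurrent.futures.ProcessPoolExecutor` or a distributed engine is a better fit because of the GIL.

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, List


def partition_by_key(records: List[dict], key: str) -> Dict[str, List[dict]]:
    """Group records into partitions by the value of `key` (e.g. an order date)."""
    partitions: Dict[str, List[dict]] = defaultdict(list)
    for record in records:
        partitions[record[key]].append(record)
    return dict(partitions)


def summarize_partition(records: List[dict]) -> float:
    """The per-partition transformation: here, total order amount."""
    return sum(r["amount"] for r in records)


def process_in_parallel(records: List[dict], key: str, max_workers: int = 4) -> Dict[str, float]:
    """Partition the data, transform partitions concurrently, then combine results."""
    partitions = partition_by_key(records, key)
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # map() returns results in input order, so they line up with the partition keys.
        totals = list(pool.map(summarize_partition, partitions.values()))
    return dict(zip(partitions.keys(), totals))


orders = [
    {"order_date": "2024-01-01", "amount": 10.0},
    {"order_date": "2024-01-01", "amount": 5.5},
    {"order_date": "2024-01-02", "amount": 7.0},
]
daily_totals = process_in_parallel(orders, key="order_date")
```

Because each partition is processed independently, adding more workers (or machines, in a distributed engine) scales the work without changing the transformation itself.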

Scalable processing ensures your pipeline won’t slow down as data volume grows.

5. Ensure Data Quality and Governance

Data quality is the foundation of any reliable data pipeline. Without clean, accurate data, insights become unreliable. Here are a few ways to ensure your data is of high quality:

- Data Validation: Implement validation checks to ensure that the incoming data is accurate, complete, and follows the defined format.

- Error Handling: Your pipeline should include mechanisms for detecting and handling errors automatically, such as retrying failed processes or sending error alerts.

- Data Lineage: Tools like Apache Atlas help track and visualize the journey of data throughout the pipeline. This ensures transparency and makes it easier to troubleshoot issues.
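Validation and retries are straightforward to sketch in plain Python. In the example below, `SCHEMA`, the field names, the business rules, and the helper names are hypothetical placeholders. Invalid records are quarantined along with their reasons instead of being silently dropped, and `with_retries` retries only exception types assumed to be transient, with exponential backoff between attempts.

```python
import time
from typing import Callable, Dict, List, Tuple, TypeVar

T = TypeVar("T")

# Hypothetical schema: field name -> accepted Python types.
SCHEMA: Dict[str, Tuple[type, ...]] = {
    "order_id": (int,),
    "email": (str,),
    "amount": (int, float),
}


def validate(record: dict) -> List[str]:
    """Return a list of problems with `record`; an empty list means it is valid."""
    errors = []
    for field, types in SCHEMA.items():
        value = record.get(field)
        if value is None:
            errors.append(f"missing {field}")
        elif not isinstance(value, types):
            errors.append(f"{field} has type {type(value).__name__}")
    # Business rules only make sense once the types are right.
    if not errors:
        if record["amount"] < 0:
            errors.append("amount is negative")
        if "@" not in record["email"]:
            errors.append("email looks malformed")
    return errors


def split_valid(records: List[dict]) -> Tuple[List[dict], List[Tuple[dict, List[str]]]]:
    """Separate valid records from invalid ones, keeping the reasons for quarantine."""
    valid, quarantined = [], []
    for record in records:
        errors = validate(record)
        if errors:
            quarantined.append((record, errors))
        else:
            valid.append(record)
    return valid, quarantined


def with_retries(
    fn: Callable[[], T],
    attempts: int = 3,
    base_delay: float = 0.5,
    retry_on: Tuple[type, ...] = (ConnectionError, TimeoutError),
) -> T:
    """Call `fn`, retrying transient failures with exponential backoff."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(1, attempts + 1):
        try:
            return fn()
        except retry_on:
            if attempt == attempts:
                raise  # out of retries: let the caller (or an alert) handle it
            time.sleep(base_delay * 2 ** (attempt - 1))  # 0.5s, 1s, 2s, ...
    raise AssertionError("unreachable")
```

Quarantined records would typically be written to a separate table or location for review. Which exceptions count as transient depends on your clients and libraries, so `retry_on` should be adjusted accordingly.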

When you maintain high standards of data quality and governance, you build trust in the insights that your pipeline produces.

6. Monitor and Maintain the Pipeline

Once your pipeline is deployed, constant monitoring is essential to maintain its health. Here’s how you can stay on top of it:

- Logging: Detailed logs are crucial for debugging and identifying potential issues.

- Real-Time Alerts: Set up automated alerts to notify you immediately if something goes wrong, such as a failed process or unusual data behavior.

- Performance Metrics: Track key performance indicators (KPIs) like processing speed, failure rates, and resource utilization to ensure your pipeline is running efficiently.

Pro Tip: Make sure to implement proactive monitoring to identify issues before they impact your operations.
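As a minimal sketch of proactive monitoring, assuming Python’s standard `logging` module and thresholds you would tune yourself (the 1% failure rate and 100 records/second below are made-up defaults), each step can log its metrics and produce an alert when a KPI crosses a threshold. `StepMetrics` and `check_step` are hypothetical names.

```python
import logging
from dataclasses import dataclass
from typing import List

logging.basicConfig(level=logging.INFO, format="%(asctime)s %(levelname)s %(name)s %(message)s")
logger = logging.getLogger("pipeline")


@dataclass
class StepMetrics:
    """Metrics collected for one run of one pipeline step."""

    step: str
    records_in: int
    records_failed: int
    duration_s: float

    @property
    def failure_rate(self) -> float:
        return self.records_failed / self.records_in if self.records_in else 0.0

    @property
    def throughput(self) -> float:
        """Records processed per second."""
        return self.records_in / self.duration_s if self.duration_s > 0 else 0.0


def check_step(
    m: StepMetrics, max_failure_rate: float = 0.01, min_throughput: float = 100.0
) -> List[str]:
    """Log the step's metrics and return alert messages for any breached threshold."""
    logger.info(
        "step=%s records_in=%d failed=%d duration_s=%.2f",
        m.step, m.records_in, m.records_failed, m.duration_s,
    )
    alerts = []
    if m.failure_rate > max_failure_rate:
        alerts.append(f"{m.step}: failure rate {m.failure_rate:.1%} above {max_failure_rate:.1%}")
    if m.records_in and m.throughput < min_throughput:
        alerts.append(f"{m.step}: throughput {m.throughput:.0f} rec/s below {min_throughput:.0f}")
    for alert in alerts:
        # Placeholder: forward to your paging or chat tool here instead of only logging.
        logger.warning(alert)
    return alerts


check_step(StepMetrics("load_orders", records_in=1000, records_failed=50, duration_s=2.0))
```

Emitting metrics in a consistent `key=value` form makes them easy to parse later, whether you feed them into a dashboard or a log search tool.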

7. Optimize for Performance and Cost

You need to strike the right balance between performance and cost. If you’re over-allocating resources, you’re overspending. If you’re under-allocating, you’re slowing down processing. Here’s how to optimize:

- Caching: Storing intermediate results can significantly speed up your pipeline and reduce redundant computations.

- Auto-Scaling: Use cloud-based solutions to scale resources up and down with demand, so you pay for less idle capacity.

- Cost Management: Monitor cloud usage closely to avoid overspending on storage and compute resources.
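A minimal sketch of caching intermediate results, assuming a local directory is an acceptable cache store: the hypothetical `cached_step` decorator hashes a step’s name and arguments, and reuses the pickled output when the same inputs are seen again.

```python
import functools
import hashlib
import json
import pickle
import tempfile
from pathlib import Path
from typing import Any, Callable


def cached_step(cache_dir: Path) -> Callable[[Callable[..., Any]], Callable[..., Any]]:
    """Cache a step's output on disk, keyed by the step name and its arguments."""

    def decorator(fn: Callable[..., Any]) -> Callable[..., Any]:
        @functools.wraps(fn)
        def wrapper(*args: Any, **kwargs: Any) -> Any:
            # Arguments should be JSON-serializable (or have a stable str()) so the key is stable.
            key_source = json.dumps([fn.__name__, args, kwargs], sort_keys=True, default=str)
            key = hashlib.sha256(key_source.encode()).hexdigest()
            path = cache_dir / f"{key}.pkl"
            if path.exists():
                with path.open("rb") as f:
                    return pickle.load(f)  # cache hit: skip recomputation
            result = fn(*args, **kwargs)
            cache_dir.mkdir(parents=True, exist_ok=True)
            with path.open("wb") as f:
                pickle.dump(result, f)
            return result

        return wrapper

    return decorator


# Demo with a throwaway cache directory.
cache_root = Path(tempfile.mkdtemp())


@cached_step(cache_root)
def expensive_transform(values: list) -> list:
    return [v * v for v in values]
```

The key does not include the step’s code, so changing the transformation logic requires clearing the cache (or adding a version string to the key). Only load pickles you wrote yourself, since unpickling untrusted data can execute arbitrary code.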

Pro Tip: Regularly review your pipeline’s performance and costs, adjusting as needed to stay within budget.

How to Build a Data Engineering Pipeline at QuantumDataLytica.com

Now that we’ve covered the general principles, let’s look at how to build a data pipeline using QuantumDataLytica.com, a no-code platform designed to simplify the creation of robust data engineering pipelines.

1. Get Started with QuantumDataLytica: Create an Account

First things first, sign up on QuantumDataLytica’s platform:

- Sign Up: Visit QuantumDataLytica and create your account.

- Dashboard Access: Log in to your dashboard, where you’ll manage everything from data sources to workflow orchestration.

2. Set Up Your Data Sources

QuantumDataLytica offers seamless integration with a wide variety of data sources.

- Choose Data Connectors: Select from pre-built connectors for databases, APIs, or cloud storage.

- Configure Connectors: Enter the necessary credentials and connection details, and test the connection to ensure everything is set up correctly.

3. Design Your Data Flow with Workflow Designer

QuantumDataLytica’s Workflow Designer allows you to visually design your data pipeline.

- Select Data Processing Steps: Choose steps like data cleansing, transformation, and aggregation.

- Add Quantum Machines: These are reusable components that handle specific tasks in your workflow.

- Design the Workflow: Arrange your data processing steps and connect them to create a seamless data flow.

4. Automate Data Ingestion

QuantumDataLytica simplifies data ingestion with automation features:

- Real-Time Ingestion: Set up continuous data ingestion using webhooks or Apache Kafka.

- Batch Ingestion: Schedule batch jobs at predefined intervals to pull data into the pipeline.

- Triggers: Set up automatic triggers to kick off workflows based on specific conditions or events.

5. Integrate Data Transformation and Enrichment

Data transformation is a critical step in refining your raw data:

- Data Cleansing: Automatically detect and remove duplicates or format data consistently.

- Data Aggregation: Aggregate data and calculate metrics using the platform’s built-in tools.

- Custom Transformations: For specific business logic, you can easily extend the platform with custom transformations.
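In QuantumDataLytica these steps are configured in the visual designer, so no code is required. Purely to illustrate what a cleansing step typically does, here is a generic Python sketch, not the platform’s implementation: it normalizes a few hypothetical fields and keeps the first record for each email address.

```python
from typing import Dict, Iterable, List, Optional


def normalize(record: dict) -> dict:
    """Apply consistent formatting to a few hypothetical fields."""
    country: Optional[str] = (record.get("country") or "").strip().upper() or None
    return {
        "email": record["email"].strip().lower(),
        "name": " ".join(record["name"].split()).title(),  # collapse extra spaces
        "country": country,
    }


def dedupe(records: Iterable[dict], key: str = "email") -> List[dict]:
    """Normalize records first, then keep the first record seen for each key value."""
    first_seen: Dict[str, dict] = {}
    for record in map(normalize, records):
        first_seen.setdefault(record[key], record)
    return list(first_seen.values())
```

Normalizing before deduplicating matters: without it, `" Ada@Example.com "` and `"ada@example.com"` would count as different people. Keeping the first occurrence is one possible rule; keeping the most recently updated record is another common choice.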

6. Implement Data Governance and Quality Checks

QuantumDataLytica ensures your data remains high quality and compliant:

- Data Validation: Set rules to ensure the accuracy and consistency of incoming data.

- Error Handling: The platform has built-in retry mechanisms and alert systems for error detection.

- Data Lineage: Visualize how data flows through the pipeline and ensure compliance with data governance standards.

7. Orchestrate Your Data Pipeline with Scheduling

Scheduling ensures that your data processing happens at the right time:

- Automated Scheduling: Set workflows to run at specific intervals (e.g., daily or weekly).

- Real-Time Monitoring: QuantumDataLytica allows you to monitor your pipeline’s performance in real time.

8. Monitor and Optimize the Pipeline

Once your pipeline is live, ongoing monitoring and optimization are essential:

- Performance Monitoring: Track processing speed and resource utilization using QuantumDataLytica’s monitoring tools.

- Alerting: Set up alerts to notify you of any issues, ensuring prompt resolution.

- Logging: All pipeline activities are logged for easy troubleshooting.

9. Scale and Expand Your Data Pipeline

QuantumDataLytica allows your pipeline to grow with your business:

- Auto-Scaling: The platform automatically scales resources based on workload demand.

- Adding More Sources: Easily integrate additional data sources as new needs arise.

- Expand to Multiple Environments: QuantumDataLytica supports multi-cloud environments, allowing you to extend your pipeline across different cloud providers.

Conclusion

Building an efficient data engineering pipeline is no longer a complex, code-heavy task. By using platforms like QuantumDataLytica, you can streamline the entire process with a no-code, intuitive interface while ensuring that your pipeline remains scalable, reliable, and cost-effective. Whether you’re dealing with batch processing, real-time data, or complex data transformations, QuantumDataLytica provides the tools to ensure your pipeline performs optimally.

FAQs

Setting up a basic pipeline typically takes just a few hours, depending on the complexity of your data sources and transformations.

QuantumDataLytica supports both batch and real-time data processing, making it versatile for various use cases.

QuantumDataLytica’s cloud-native infrastructure scales automatically based on data volume and processing requirements.
