The Rise of AI-Powered Data Pipelines: What Every Business Should Know

Introduction

Data has never been more valuable – or more difficult to manage.

Modern businesses collect information from dozens of systems: CRMs, property management systems (PMS), finance tools, marketing channels, IoT feeds, and third-party APIs. Yet most teams still rely on a fragile mix of scripts, scheduled jobs, and legacy ETL tools to keep everything running.

This approach doesn’t scale. Pipelines break silently. Changes take weeks. AI initiatives stall because the data underneath isn’t ready.

That’s why a new model is emerging: AI-powered, workflow-first data pipelines.

At the center of this shift is QuantumDataLytica, a platform for building data pipelines visually, reusing logic modularly, and preparing data for AI from day one.

The Data Problem Most Teams Face

Most data teams don’t suffer from a lack of tools. They suffer from fragmentation.

Over time, pipelines become duct-taped together using Python scripts, cron jobs, and point solutions that only one person understands. Business teams depend heavily on engineers, while engineers spend more time maintaining pipelines than improving them.

Collaboration breaks down because logic isn’t reusable. Every new use case becomes a new pipeline. Metadata lives in someone’s head. Governance is reactive. And when AI enters the conversation, teams realize their data isn’t structured, traceable, or reliable enough to support it.

The result is slow innovation, high operational cost, and missed opportunities.

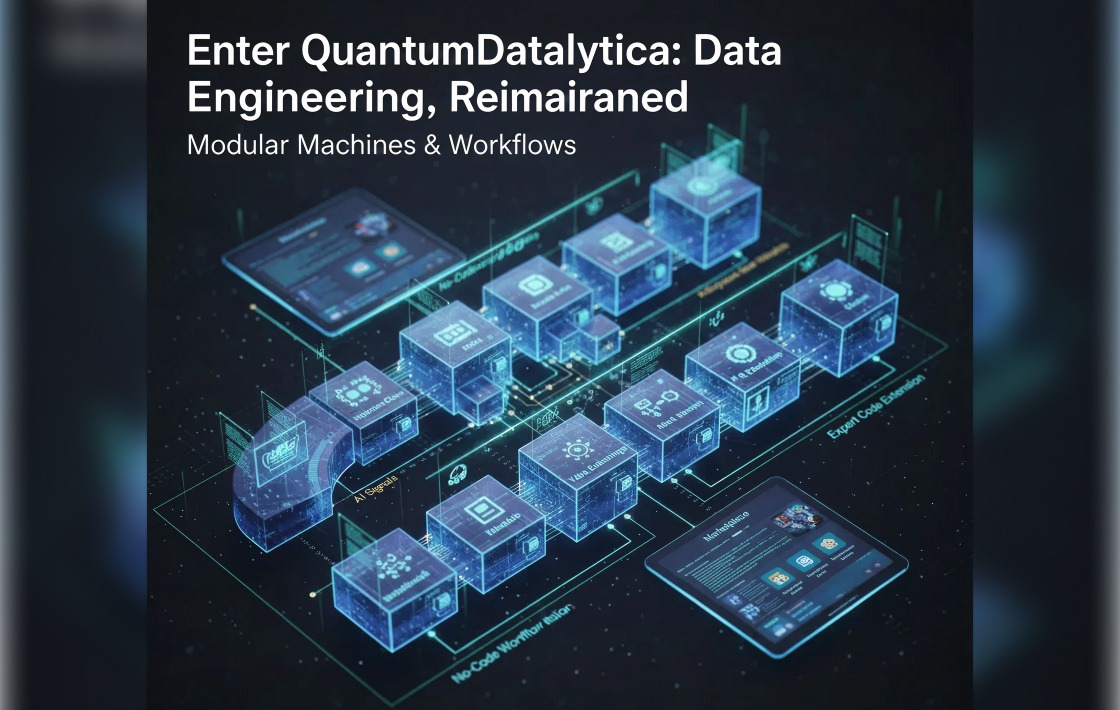

Enter QuantumDataLytica: Data Engineering, Reimagined

QuantumDataLytica approaches data engineering differently. Instead of starting with code, it starts with workflows – visual, modular, and AI-ready by design.

Modular Machines & Workflows

Every pipeline is built from reusable Machines: ingestion, cleaning, merging, validation, anomaly detection, enrichment, and dispatch. These Machines can be combined, reused, and nested across workflows, eliminating duplication and simplifying maintenance.
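To make the idea of composable Machines concrete, here is a minimal, hypothetical Python sketch. It is not QuantumDataLytica's API: `Workflow`, `drop_empty_rows`, `dedupe_by`, and `sort_by_id` are illustrative names. It assumes each Machine can be modeled as a function from a batch of records to a new batch, so a workflow that chains Machines has the same shape as a single Machine and can itself be nested inside another workflow.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List

Record = Dict[str, Any]
# Hypothetical model: a Machine is any step that maps a batch of records to a new batch.
Machine = Callable[[List[Record]], List[Record]]


@dataclass
class Workflow:
    """An ordered chain of Machines. A Workflow is callable with the same
    signature as a Machine, so it can be nested inside another Workflow."""
    name: str
    steps: List[Machine]

    def __call__(self, records: List[Record]) -> List[Record]:
        for step in self.steps:
            records = step(records)
        return records


def drop_empty_rows(records: List[Record]) -> List[Record]:
    # Cleaning Machine: keep rows with at least one non-empty value.
    return [r for r in records if any(v not in (None, "") for v in r.values())]


def dedupe_by(key: str) -> Machine:
    # Factory for a reusable dedupe Machine, parameterized by the key column.
    def machine(records: List[Record]) -> List[Record]:
        seen = set()
        out = []
        for r in records:
            if r.get(key) not in seen:
                seen.add(r.get(key))
                out.append(r)
        return out
    return machine


def sort_by_id(records: List[Record]) -> List[Record]:
    # Ordering Machine: sort records by their "id" field.
    return sorted(records, key=lambda r: r["id"])


# A small reusable "clean" workflow ...
clean = Workflow("clean", [drop_empty_rows, dedupe_by("id")])
# ... nested as a single step inside a larger workflow.
pipeline = Workflow("ingest_and_clean", [clean, sort_by_id])

rows = [{"id": 2, "v": "b"}, {"id": 1, "v": "a"}, {"id": 2, "v": "b"}, {"id": None, "v": None}]
print(pipeline(rows))  # → [{'id': 1, 'v': 'a'}, {'id': 2, 'v': 'b'}]
```

Because `Workflow` exposes the same call signature as a Machine, the `clean` workflow is reused as one step of `pipeline`: the nesting and reuse described above, in miniature.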

No-Code + Expert Mode

Business users can build workflows visually without touching code. When deeper control is needed, developers can extend Machines using Python, SQL, or JavaScript. One platform, two modes, no compromise.

Marketplace of Reusable Components

QuantumDataLytica includes a marketplace of prebuilt Machines – connectors, transformations, ML components, and industry-specific logic. Think of it as workflow reuse at scale, where teams don’t reinvent what already exists.

AI-First Data Architecture

Metadata, schema validation, lineage, and embeddings are built into the platform. This makes downstream AI use cases – forecasting, copilots, agents, and LLM workflows – far easier to implement.

Secure, Scalable & Observable

With role-based access, audit trails, logs, scheduling, webhooks, and APIs, QuantumDataLytica is built for enterprise-grade reliability without enterprise-grade complexity.

Sample Workflow: Hotel Chain Revenue Analytics

Consider a hotel group managing revenue analytics across multiple properties.

Each day, QuantumDataLytica pulls operational data from a Cloudbeds PMS, joins it with rate-shopping (competitor pricing) feeds and local event data, and applies anomaly detection to flag unusual pricing or demand shifts. The workflow then schedules automated BI report delivery and feeds cleaned, structured outputs directly into RevEvolve for forecasting and pricing strategy.
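The join-then-flag step of this workflow can be sketched in plain Python. This is a minimal illustration, assuming the three daily feeds have already been pulled into lists of dicts keyed by date; the field names (`adr`, `comp_rate`, `event`), the helper functions, and all sample values are made up and do not reflect a Cloudbeds or QuantumDataLytica schema. The z-score test is a deliberately simple stand-in, not the anomaly detection the platform actually uses.

```python
from statistics import mean, pstdev
from typing import Any, Dict, List

Row = Dict[str, Any]


def join_by_date(pms: List[Row], comp_rates: List[Row], events: List[Row]) -> List[Row]:
    # Left join on "date": every PMS day is kept; competitor rate and event
    # are attached when a matching date exists, otherwise set to None.
    rates = {r["date"]: r["comp_rate"] for r in comp_rates}
    evts = {e["date"]: e["event"] for e in events}
    return [
        {**day, "comp_rate": rates.get(day["date"]), "event": evts.get(day["date"])}
        for day in pms
    ]


def flag_anomalies(rows: List[Row], field: str = "adr", threshold: float = 2.0) -> List[Row]:
    # Simple z-score test: mark a day as anomalous when its value lies more than
    # `threshold` population standard deviations from the window mean.
    values = [r[field] for r in rows]
    mu, sigma = mean(values), pstdev(values)
    return [
        {**r, "anomaly": sigma > 0 and abs(r[field] - mu) / sigma > threshold}
        for r in rows
    ]


# Made-up sample data for one property and one week (ADR = average daily rate).
adrs = [120.0, 118.0, 122.0, 119.0, 121.0, 120.0, 180.0]
pms = [{"date": f"2025-12-0{i + 1}", "adr": a} for i, a in enumerate(adrs)]
comp_rates = [
    {"date": "2025-12-06", "comp_rate": 124.0},
    {"date": "2025-12-07", "comp_rate": 172.0},
]
events = [{"date": "2025-12-07", "event": "City marathon"}]

result = flag_anomalies(join_by_date(pms, comp_rates, events))
flagged = [(r["date"], r["comp_rate"], r["event"]) for r in result if r["anomaly"]]
print(flagged)  # → [('2025-12-07', 172.0, 'City marathon')]
```

In this toy week only 7 December is flagged, and the joined columns place it next to a higher competitor rate and a local event: the kind of context that helps a reviewer decide whether a spike is an error or real demand. On short windows a z-score is a blunt instrument, so treat it as a placeholder for whatever detector a real workflow would use.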

What once required multiple scripts, servers, and manual checks now runs as a single, reusable workflow – monitored, logged, and AI-ready.

| Feature | QuantumDataLytica | n8n | Airbyte | Alteryx |
|---|---|---|---|---|
| Visual Pipeline Builder | ✔ | ✔ | ✖ | ✔ |
| AI-Ready Architecture | ✔ | ✖ | ✖ | ✖ |
| Built-in Workflow Marketplace | ✔ | ✖ | ✖ | ✖ |
| Metadata Management | ✔ | ✖ | ✖ | ✔ |
| Nested / Reusable Workflows | ✔ | ✖ | ✖ | ✖ |

Teams choose QuantumDataLytica because it doesn’t just move data – it structures how data work gets done.

Getting Started

Getting started with QuantumDataLytica is intentionally simple.

You sign up, browse the Machine library, assemble your workflow visually, test it with real data, schedule execution, and deploy with full logging and alerts. No infrastructure decisions. No DevOps overhead.

Call to Action

Launch your free QuantumDataLytica account today

Or contact info@quantumdatalytica.com for enterprise onboarding and guided setup.

FAQs

Can I build my own Machines?

Yes. You can build custom Machines using Python, SQL, or JavaScript and reuse them across workflows or publish them to the marketplace.

Is QuantumDataLytica similar to workflow automation tools?

In spirit, yes. But QuantumDataLytica is designed for structured data pipelines, analytics, and AI workflows, not just trigger-action automation.

Who controls my data?

You stay in control. QuantumDataLytica connects to your sources, transforms data, and writes to your chosen destinations. Data ownership remains yours.

Published 29 Dec, 2025 in Data Management Innovations.